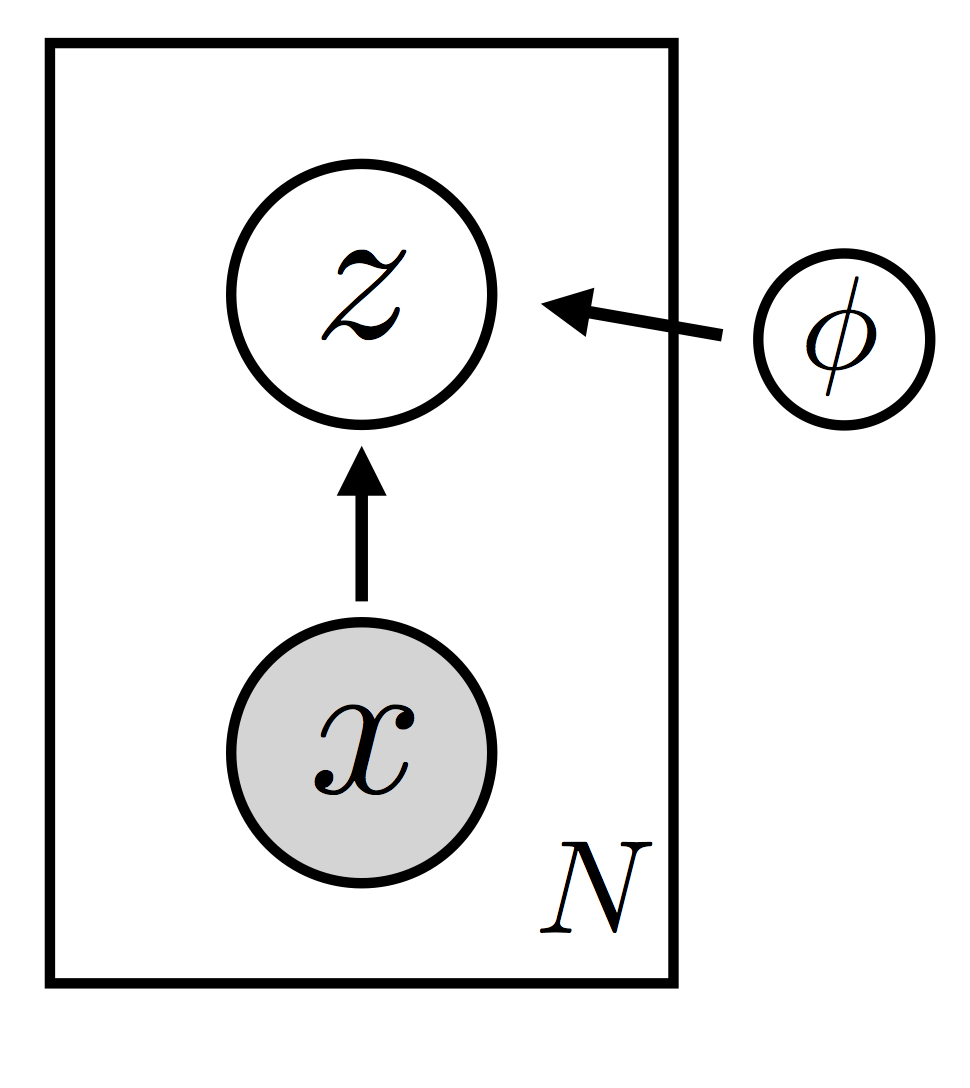

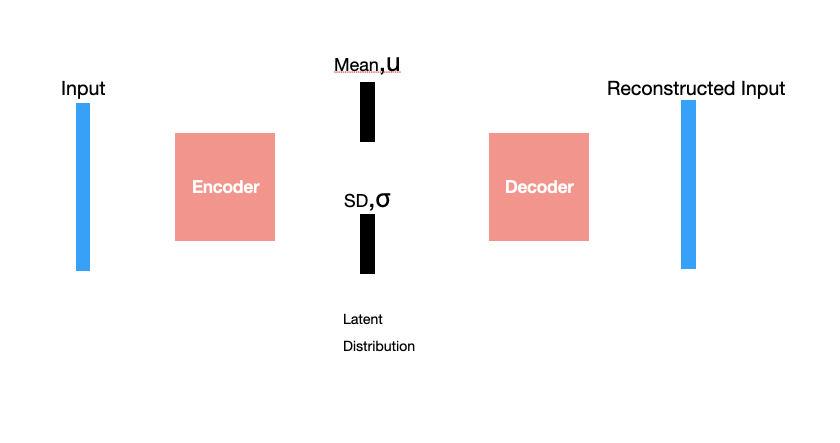

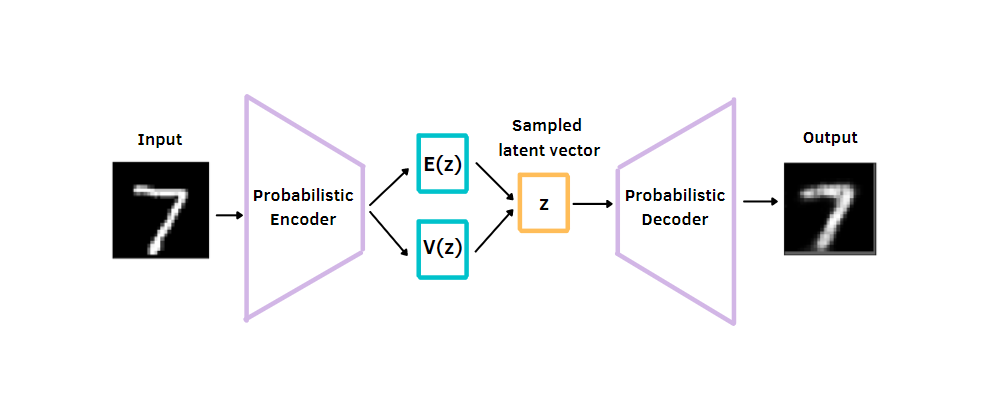

PyTorch VAE Example: (1) the log probability of z under the q distribution, and (2) the log probability of z under the p distribution. Sample $z = \mu + \epsilon \sigma$.
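The sampling step and the two log probabilities mentioned above can be sketched in a few lines. This is only an illustration under stated assumptions: the values of `mu` and `log_var` are made up stand-ins for encoder outputs, the prior p is assumed to be a standard normal, and q is assumed to be a diagonal Gaussian.

```python
import torch
from torch.distributions import Normal

torch.manual_seed(0)

# Hypothetical encoder outputs: a batch of 2 inputs, 2 latent dimensions.
mu = torch.tensor([[0.5, -1.0], [2.0, 0.0]])
log_var = torch.tensor([[0.0, -1.0], [0.5, 0.2]])  # log of sigma squared
sigma = torch.exp(0.5 * log_var)

# Reparameterization: z = mu + epsilon * sigma, with epsilon ~ N(0, I).
epsilon = torch.randn_like(sigma)
z = mu + epsilon * sigma

q = Normal(mu, sigma)                                     # assumed q(z|x)
p = Normal(torch.zeros_like(mu), torch.ones_like(sigma))  # assumed prior p(z)

# (1) log q(z|x) and (2) log p(z), each summed over the latent dimensions.
log_qzx = q.log_prob(z).sum(dim=-1)
log_pz = p.log_prob(z).sum(dim=-1)

# Single-sample Monte Carlo estimate of KL(q || p), one value per example.
kl_estimate = log_qzx - log_pz
```

Because the noise `epsilon` is drawn separately from `mu` and `sigma`, gradients can flow back through both encoder outputs.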

Face Image Generation Using Convolutional Variational Autoencoder And Pytorch

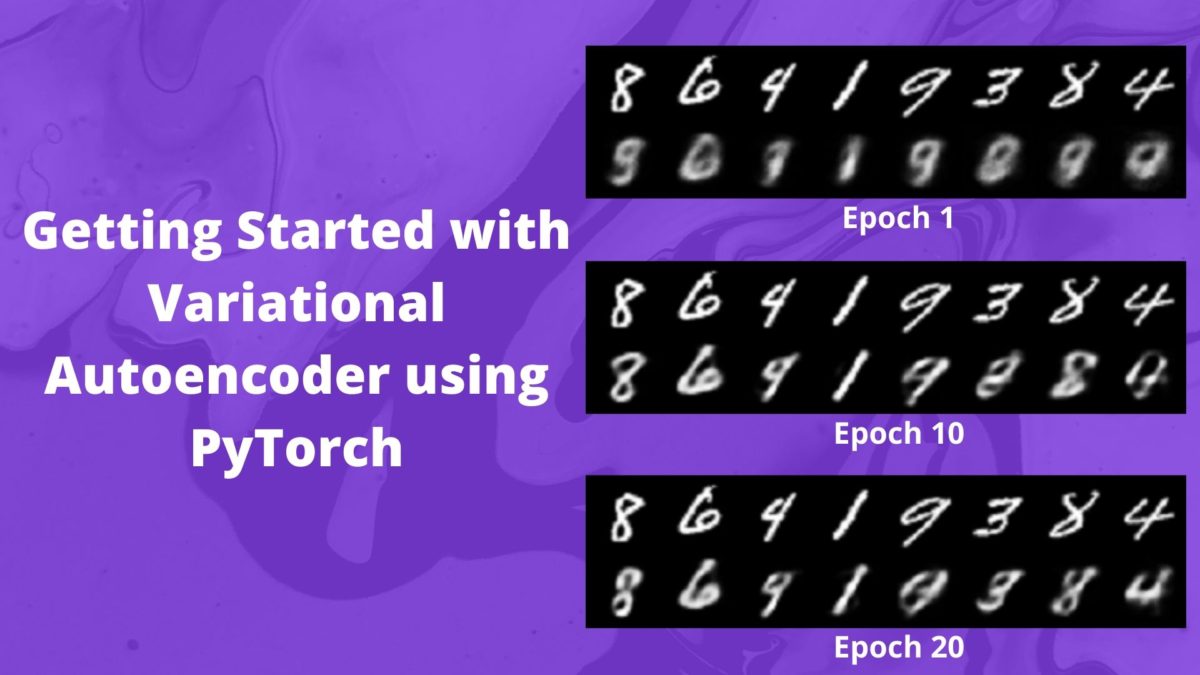

The training set contains 60,000 images; the test set contains only 10,000.

PyTorch VAE example. Like many PyTorch documentation examples, the VAE example was OK but was poorly organized and had several minor errors, such as using deprecated functions. I adapted PyTorch's example code to generate Frey faces. `parser.add_argument('--batch-size', type=int, default=128, metavar='N', help='input batch size for training (default: 128)')`

To train these models, we refer readers to the PyTorch GitHub repository. Notice that samples from each row are almost identical when the variability comes from a low-level layer, as such layers mostly. Getting to know about the Frey Face dataset.

`parser.add_argument('--epochs', type=int, default=10, metavar='N', help='number of epochs to train (default: 10)')`. Coding a variational autoencoder in PyTorch and leveraging the power of GPUs can be daunting. We then obtain sigma and mu from the encoder's output.

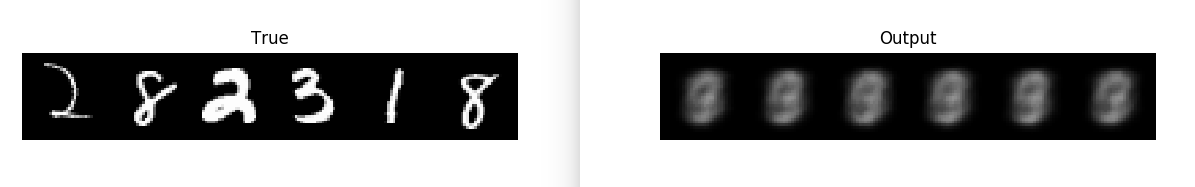

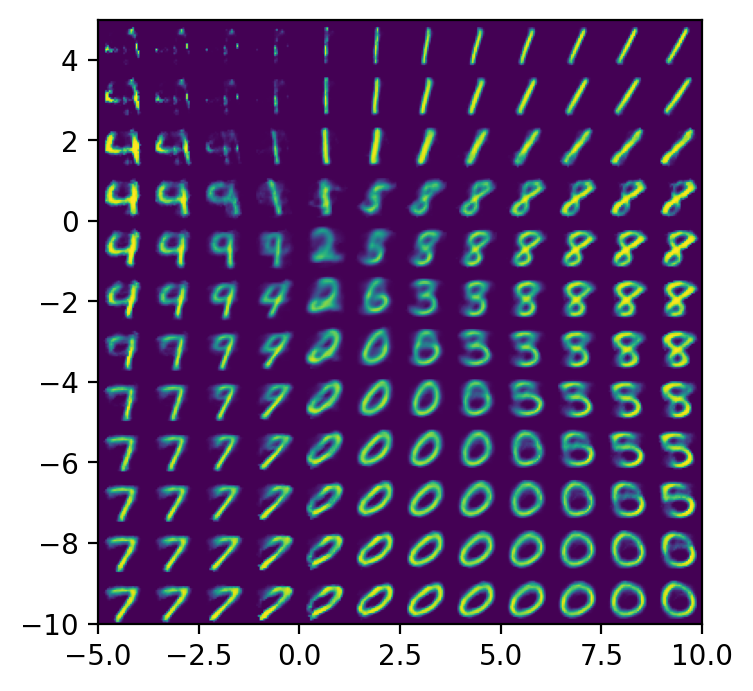

Since we are training in minibatches. We will work with the MNIST dataset. The equations above yield $S$ sample images conditioned on the same values of $z$ for layers $i+1$ to $L$.

`torch.manual_seed(0)`, `import torch.nn as nn`, `import torch.nn.functional as F`, `import torch.utils`, `import torch.distributions`, `import torchvision`, `import numpy as np`, `import matplotlib.pyplot as plt`. Getting started with a convolutional variational neural network on greyscale images.

All the models are trained on the CelebA dataset for consistency and comparison. Now the log likelihood of the full data point is given by. These S samples are shown in one row of the images below.

Fill in any place that says YOUR CODE HERE or YOUR ANSWER HERE. The architectures of all the models are kept as similar as possible, with the same layers. The example generated fake MNIST images (28 by 28 grayscale images of handwritten digits).

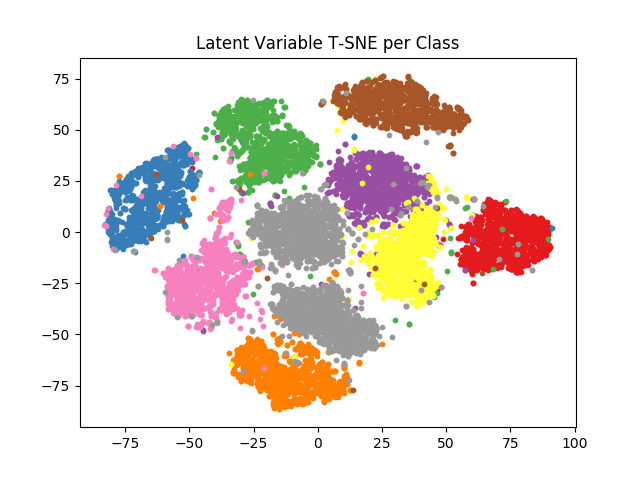

A collection of Variational AutoEncoders (VAEs) implemented in PyTorch with a focus on reproducibility. Topics: deep-neural-networks, deep-learning, pytorch, autoencoder, vae, deeplearning, faces, celeba, variational-autoencoder, celeba-dataset. It also means that if we're running on a GPU, the call to `cuda()` will move all the parameters of all the submodules into GPU memory.
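The remark about submodules can be made concrete with a small sketch. The `VAE` class below is a hypothetical fully connected model with assumed layer sizes (784, 400, 20), not the architecture of any of the repositories mentioned here. It only shows that layers assigned as attributes are registered automatically, so one call moves or lists all of their parameters.

```python
import torch
import torch.nn as nn


class VAE(nn.Module):
    """Hypothetical minimal VAE; layer sizes are illustrative assumptions."""

    def __init__(self, input_dim=784, hidden_dim=400, latent_dim=20):
        super().__init__()
        # Each nn.Module assigned as an attribute is registered as a submodule.
        self.encoder = nn.Linear(input_dim, hidden_dim)
        self.fc_mu = nn.Linear(hidden_dim, latent_dim)
        self.fc_log_var = nn.Linear(hidden_dim, latent_dim)
        self.decoder = nn.Sequential(
            nn.Linear(latent_dim, hidden_dim), nn.ReLU(),
            nn.Linear(hidden_dim, input_dim), nn.Sigmoid(),
        )

    def forward(self, x):
        h = torch.relu(self.encoder(x))
        mu, log_var = self.fc_mu(h), self.fc_log_var(h)
        z = mu + torch.randn_like(mu) * torch.exp(0.5 * log_var)
        return self.decoder(z), mu, log_var


model = VAE()

# One call moves the parameters of every registered submodule.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

# parameters() walks all submodules, so nothing has to be listed by hand.
n_tensors = len(list(model.parameters()))
n_params = sum(p.numel() for p in model.parameters())
```

Here `model.to(device)` is used in place of `model.cuda()` so the sketch also runs on a machine without a GPU.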

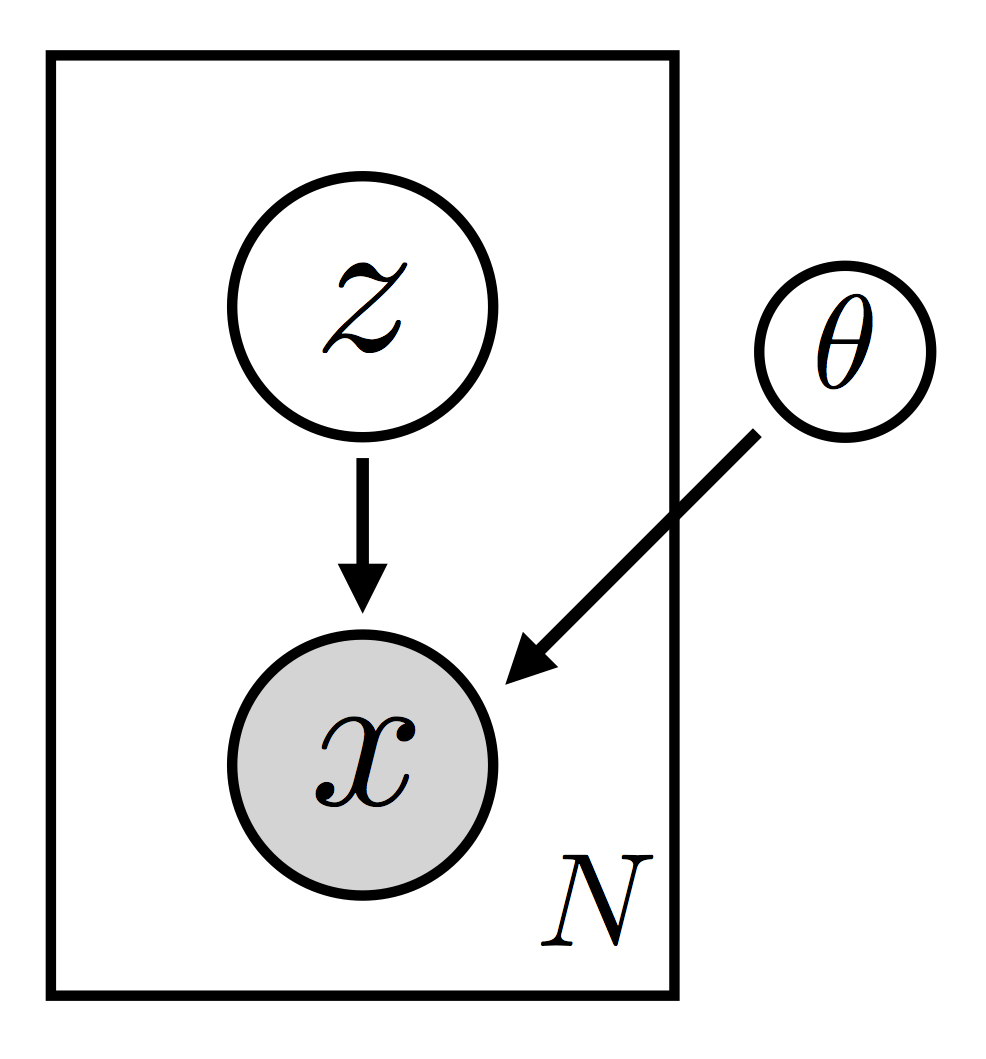

Example of vanilla VAE for face image generation at resolution 128x128 using PyTorch. $\mathcal{L}_{VAE} = \mathcal{L}_R + \mathcal{L}_{KL}$. Finally, we need to sample from the input space using the following formula. We will code the Variational Autoencoder (VAE) in PyTorch because it's much.

In PyTorch, the final expression is implemented by `torch.nn.functional.binary_cross_entropy` with `reduction='sum'`. Posted on July 14, 2017. We then instantiate the model and again load a pre-trained model.
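As a hedged sketch of how the reconstruction term fits into a full VAE loss: the function name `vae_loss` is hypothetical, and the closed-form KL term assumes a diagonal Gaussian posterior and a standard normal prior, which the text above does not spell out.

```python
import torch
import torch.nn.functional as F


def vae_loss(recon_x, x, mu, log_var):
    """Return (total, reconstruction, KL) for one minibatch."""
    # Reconstruction term: binary cross entropy summed over pixels and batch.
    recon = F.binary_cross_entropy(recon_x, x, reduction="sum")
    # Assumed closed-form KL(N(mu, sigma^2) || N(0, I)), summed over the batch.
    kl = -0.5 * torch.sum(1 + log_var - mu.pow(2) - log_var.exp())
    return recon + kl, recon, kl


# Toy inputs: 2 flattened 28x28 images, every pixel predicted as 0.5.
x = torch.zeros(2, 784)
recon_x = torch.full((2, 784), 0.5)
mu = torch.zeros(2, 20)
log_var = torch.zeros(2, 20)

total, recon, kl = vae_loss(recon_x, x, mu, log_var)
```

With `mu` and `log_var` at zero the posterior equals the prior, so the KL term vanishes and the total reduces to the reconstruction term.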

where $s = 1, \dots, S$ denotes the sample index. Implementing variational autoencoders using the PyTorch deep learning framework.

Using the VAE network to train on the Frey Face dataset and generating new face images. The negative of it is commonly known as binary cross entropy and is implemented in PyTorch by `torch.nn.BCELoss`. Finally, sample an image $x$ given the latent variables Eq.

So, for example, when we call `parameters()` on an instance of `VAE`, PyTorch will know to return all the relevant parameters. `parser = argparse.ArgumentParser(description='VAE MNIST Example')`. Run subsequent cells to check your code.
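The argument-parser fragments quoted on this page (the description, `--batch-size`, and `--epochs`) fit together roughly as follows. This is a sketch assembled from those fragments only. Any other options the original script may define are not shown, and parsing an explicit list is an assumption made so that it also runs inside a notebook.

```python
import argparse

parser = argparse.ArgumentParser(description="VAE MNIST Example")
parser.add_argument("--batch-size", type=int, default=128, metavar="N",
                    help="input batch size for training (default: 128)")
parser.add_argument("--epochs", type=int, default=10, metavar="N",
                    help="number of epochs to train (default: 10)")

# Parse an explicit argument list instead of sys.argv (assumption for the demo).
args = parser.parse_args(["--epochs", "5"])

# argparse turns "--batch-size" into the attribute name "batch_size".
print(args.batch_size, args.epochs)
```

In a command-line script, `parser.parse_args()` with no arguments reads the options from `sys.argv` instead.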

This equation may look familiar. Below is an implementation of an autoencoder written in PyTorch. This was mostly an instructive exercise for me to mess around with PyTorch and the VAE, with no performance considerations taken into account.

This is a self-correcting activity generated by nbgrader. We apply it to the MNIST dataset. This is a minimalist, simple, and reproducible example.

`plt.rcParams['figure.dpi'] = 200`. Notice that z has almost zero probability of having come from p. Our VAE model follows the PyTorch VAE example, except that we use the same data transform from the CNN tutorial for consistency.

Let's call this loss $\mathcal{L}_{KL}$. But it has 6% probability of having come from q. Basic VAE Example (GitHub).

What will you learn in this tutorial? The network has the following architecture. The aim of this project is to provide a quick and simple working example for many of the cool VAE models out there.

Now that we have a sample, the next parts of the formula ask for two things. So the final VAE loss that we need to optimize is. Frey Faces with the VAE in PyTorch.

Github Addtt Ladder Vae Pytorch Ladder Variational Autoencoders Lvae In Pytorch

Variational Autoencoders Pyro Tutorials 1 7 0 Documentation

Pytorch Vae Variational Autoencoder Example Not Training No Meaningful Model Returned Vision Pytorch Forums

Complete Guide To Build An Autoencoder In Pytorch And Keras By Sai Durga Mahesh Analytics Vidhya Medium

Variational Autoencoders Vae With Pytorch Alexander Van De Kleut

Github Podgorskiy Vae Example Of Vanilla Vae For Face Image Generation At Resolution 128x128 Using Pytorch

Github Bvezilic Variational Autoencoder Pytorch Implementation Of Variational Autoencoder Vae On Mnist Dataset

Variational Autoencoder With Pytorch By Eugenia Anello Dataseries Medium

Github Sashamalysheva Pytorch Vae This Is An Implementation Of The Vae Variational Autoencoder For Cifar10

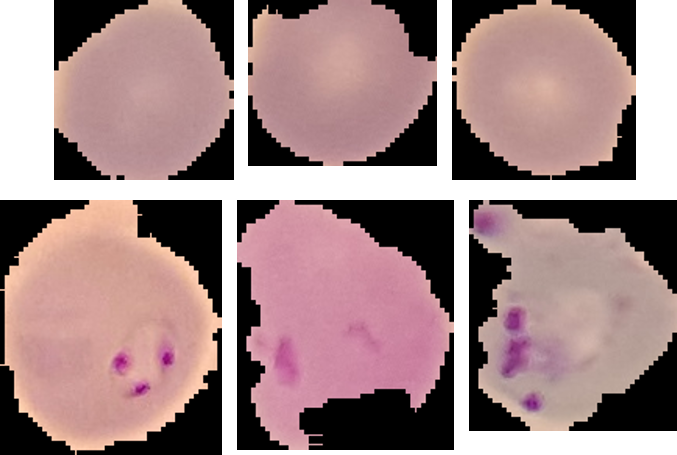

Using Pytorch To Generate Images Of Malaria Infected Cells By Daniel Bojar Towards Data Science

Getting Started With Variational Autoencoder Using Pytorch
